Mar 2, 2020

How To Make Your Website Work Offline part 2

Your dynamic content can be made available offline as well

In my previous article I showed you how you can easily make your static website work offline by using a service worker.

But most websites today don’t consist of static content only; they serve dynamic content as well. This content can come from a REST API, for example, and it won’t normally be available when a client is offline.

Luckily, service workers have got you covered here too: API responses can be cached just like static files.

Caching dynamic content

Whenever a request to the API is made we can cache the response for later use. If the same request is then made again and it fails for whatever reason, we just serve the response we cached earlier.

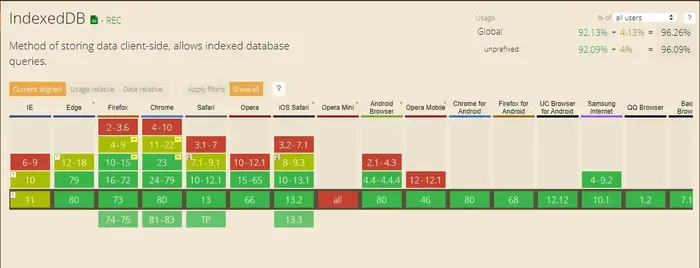

These responses can be cached using IndexedDB, which is an object-oriented, client-side key-value store that can persist large amounts of structured data. While localStorage and sessionStorage are useful for storing small amounts of data, IndexedDB is better suited to large amounts.

IndexedDB has excellent browser support: every current major browser implements it.

Since all data in IndexedDB is indexed with a key, lookups by key are fast, which makes it a good fit for caching API responses.
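IndexedDB's API is built on request objects with onsuccess and onerror callbacks rather than on promises. As a minimal sketch (the helper names promisifyRequest and saveAndRead are hypothetical and are not part of the service worker shown later), a request can be wrapped in a Promise so that a record can be written and then read back by its key:

```javascript
// wraps an IDBRequest-like object in a Promise
const promisifyRequest = request => new Promise((resolve, reject) => {
  request.onsuccess = () => resolve(request.result);
  request.onerror = () => reject(request.error);
});

// writes a record to a store and reads it back by its key
// assumption: `db` is an open IDBDatabase whose store `storeName` uses keyPath 'url'
const saveAndRead = async (db, storeName, record) => {
  const store = db.transaction(storeName, 'readwrite').objectStore(storeName);
  await promisifyRequest(store.put(record));
  return promisifyRequest(store.get(record.url));
};
```

Called as saveAndRead(db, 'api_responses', {url, json}), this would resolve with the stored record, or with undefined if nothing is stored under that key.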

Here’s the fetch event handler which takes care of caching our API requests and responses:

self.addEventListener('fetch', e => {
  const {method, url} = e.request;
  // clone the request up front, since its body can only be read once
  const requestClone = e.request.clone();

  // if the url of the request contains '/api', it's a call to our api
  if(url.includes('/api')) {
    e.respondWith(
      fetch(e.request)
        .then(response => {
          // here we cache the response for future use
          // cacheApiResponse stores plain data, so we read the JSON from a clone
          if(method === 'GET') {
            response.clone().json()
              .then(json => cacheApiResponse({url, json}))
              .catch(error => console.log('response not cached', error));
          }
          return response;
        })
        .catch(err => {
          // something went wrong in calling our api, let's serve
          // the response we cached earlier
          if(method === 'GET') {
            return getCachedApiResponse(e.request);
          }
          // if it was a POST request we tried to write something
          // to our api.
          // let's save the request and try again later
          if(method === 'POST') {
            cacheApiRequest(requestClone);
            return new Response(JSON.stringify({
              message: 'POST request was cached'
            }));
          }
          // any other method: pass the failure on to the page
          throw err;
        })
    );
  }
  else {
    e.respondWith(
      caches.match(e.request)
        .then(response => response ? response : fetch(e.request))
    );
  }
});

Let’s say the URL of the API is https://www.oursite.com/api. When the URL of a request contains /api, we know it’s a call to the API. We pass it through by calling e.respondWith with fetch(e.request), which simply forwards the same request.
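One caveat with url.includes('/api'): it also matches URLs that merely contain that text somewhere, such as an image at /img/api-logo.png. A slightly stricter check, sketched here with a hypothetical helper called isApiRequest, compares the path of the parsed URL instead:

```javascript
// returns true only when the path of the url is /api or starts with /api/
const isApiRequest = url => {
  const {pathname} = new URL(url);
  return pathname === '/api' || pathname.startsWith('/api/');
};

console.log(isApiRequest('https://www.oursite.com/api/blog/1'));       // true
console.log(isApiRequest('https://www.oursite.com/img/api-logo.png')); // false
```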

When the response arrives, it is cloned. The clone is saved to IndexedDB with the cacheApiResponse method (implementation below) and the original response is served.

However, when an error occurs while fetching and the Promise returned from fetch(e.request) rejects, we catch the error and serve an API response that was cached earlier with getCachedApiResponse(e.request).

This way we can ensure that calls for dynamic content that was cached earlier will also succeed when users are offline or the API is unreachable for other reasons.

Automatic synchronization

Now the examples above were all concerning GET requests to fetch data, but what if you need to do POST requests to persist data in the backend?

As you can see there is a check for a POST request in the catch clause in the example above:

.catch(err => {
  ...
  if(method === 'POST') {
    cacheApiRequest(requestClone);
    return new Response(JSON.stringify({
      message: 'POST request was cached'
    }));
  }
})

This means that whenever a POST request to the API fails, due to the user being offline for example, a clone of the request is saved using the cacheApiRequest method (implementation below) and a custom response is returned, indicating that the POST request was saved.

This allows us to save all pending changes in IndexedDB and send them to the backend later, when the user is back online.
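A Request object cannot be stored in IndexedDB directly, so it has to be turned into plain data first and rebuilt when it is retried. The following is a reduced sketch of that round trip. The names serializeRequest and deserializeRequest are hypothetical, and only the URL, method, headers and body are kept here:

```javascript
// turns a Request into a plain object that IndexedDB can store
const serializeRequest = async request => ({
  url: request.url,
  method: request.method,
  headers: Object.fromEntries(request.headers.entries()),
  // read the body from a clone so the original request stays usable
  body: await request.clone().text()
});

// rebuilds a Request from the stored plain object
const deserializeRequest = ({url, method, headers, body}) =>
  new Request(url, {
    method,
    headers,
    // GET and HEAD requests are not allowed to carry a body
    body: method === 'GET' || method === 'HEAD' ? undefined : body
  });
```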

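When the user’s connection is recovered, the browser fires a sync event in the service worker, provided a sync was registered earlier through the Background Sync API (registration.sync.register()), which not every browser supports. In the handler for this event we can retry the previously made API calls:

self.addEventListener('sync', e => {
  e.waitUntil(retryApiCalls());
});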
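The sync event only fires for a sync that was registered beforehand. Below is a minimal sketch of that registration, with a feature check because the Background Sync API is not available in every browser. The helper name registerRetrySync and the tag 'retry-api-calls' are assumptions made for illustration:

```javascript
// registers a background sync if the browser supports it
// resolves with true when a sync was registered and false otherwise
const registerRetrySync = async (registration, tag = 'retry-api-calls') => {
  if(!registration || !('sync' in registration)) {
    return false;
  }
  await registration.sync.register(tag);
  return true;
};
```

Inside the service worker this could be called as registerRetrySync(self.registration) right after a failed POST request has been stored. From a page, navigator.serviceWorker.ready resolves with the registration to pass in.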

Preloading responses

So far we have seen how to serve static assets that were previously cached and how to save API responses to serve them later from cache in case the API is not available or the user is offline.

But API calls that are made to fetch dynamic content will have to be made at least once first so they can be cached for successive calls. This means that any API call that was not made first will not be cached and will therefore not be available when the user is offline.

If your website consists of static HTML pages only, you can cache these in the install event by feeding them to the cache.addAll() call:

const filesToCache = [
  '/index.html',
  '/about.html',
  '/blog/posting.html'...
];

self.addEventListener('install', e => {
  e.waitUntil(
    caches.open(cacheName)
      .then(cache => cache.addAll(filesToCache))
  );
});

We can actually do the same for any or certain API calls that will be made from our website to prefetch content. For example, if your site is a blog you could prefetch your most recent or popular postings upfront so they will be instantly available, even when the user is offline.

The user only needs to visit one page of your site and when the service worker is activated, we prefetch the content we want. The right place for this is the activate event of the service worker:

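self.addEventListener('activate', e => {
  ...
  const postings = [
    '/api/blog/1',
    '/api/blog/12',
    '/api/blog/7'
  ];
  e.waitUntil(async function() {
    await Promise.all(postings.map(url => prefetch(url)));
  }());
});

const prefetch = async url => {
  const response = await fetch(url);
  const json = await response.json();
  // cached responses are looked up by request.url, which is absolute,
  // so we store the response under the absolute url
  cacheApiResponse({url: new URL(url, self.location.origin).href, json});
};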

Inside the activate event we iterate over an array containing say, the URLs of our most popular blog postings. Each posting is then fetched in the background and stored using the cacheApiResponse method.

Now we are able to serve all these postings from cache so they will be immediately available without requiring a network call.
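Note that Promise.all rejects as soon as a single prefetch fails, so one unreachable posting makes the whole promise passed to e.waitUntil reject. If you would rather know which postings failed, Promise.allSettled is an alternative. In this sketch, prefetchAll is a hypothetical helper that receives the prefetch function as an argument and reports the URLs that could not be fetched:

```javascript
// runs prefetchOne for every url and resolves with the urls that failed
const prefetchAll = async (urls, prefetchOne) => {
  const results = await Promise.allSettled(urls.map(url => prefetchOne(url)));
  return urls.filter((url, index) => results[index].status === 'rejected');
};
```

In the activate handler this could be used as e.waitUntil(prefetchAll(postings, prefetch)), optionally logging the returned list.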

Not only is your website now fully available offline, it will also load near instantly, giving users an app-like experience.

I advise you to dive into IndexedDB to learn its API. It’s quite simple and easy to learn.

Here’s the complete service worker with explanations in the comments:

const version = 1;
const cacheName = 'our_awesome_cache';

// the static files we want to cache
const staticFiles = [
  'index.html',
  'src/css/styles.css',
  'src/img/logo.jpg',
  'src/js/app.js'
];

// here we configure IndexedDB with two stores: one for api requests and one for api responses
// 'keyPath' is the key we use to retrieve the request or response, in this case it's the url
// of the request or response
const IDBConfig = {
  name: 'my_awesome_idb',
  version,
  stores: [
    {
      name: 'api_requests',
      keyPath: 'url'
    },
    {
      name: 'api_responses',
      keyPath: 'url'
    }
  ]
};

// here we create our IndexedDB database
const createIndexedDB = ({name, version, stores}) => {
  const request = self.indexedDB.open(name, version);
  return new Promise((resolve, reject) => {
    request.onupgradeneeded = e => {
      const db = e.target.result;
      // here we loop over our stores (one for api requests, one for api responses)
      // we check if they already exist. If not, they are created
      stores.forEach(({name, keyPath}) => {
        if(!db.objectStoreNames.contains(name)) {
          db.createObjectStore(name, {keyPath});
        }
      });
    };
    request.onsuccess = () => resolve(request.result);
    request.onerror = () => reject(request.error);
  });
};

// here we prefetch the api call and cache the response so we can serve it later
const prefetch = async url => {
  const response = await fetch(url);
  const json = await response.json();
  // the prefetch urls are relative, but cached responses are looked up by request.url,
  // which is absolute, so we store the response under the absolute url
  cacheApiResponse({url: new URL(url, self.location.origin).href, json});
  return json;
};

// factory function to get the correct store
// we pass it the name and version of the IndexedDB database and it returns
// a function that we pass the config of the correct store (api requests or api responses)
// and the transaction mode
const getStoreFactory = (dbName, version) => ({name}, mode = 'readonly') => {
  return new Promise((resolve, reject) => {
    const request = self.indexedDB.open(dbName, version);
    request.onsuccess = () => {
      const db = request.result;
      const transaction = db.transaction(name, mode);
      resolve(transaction.objectStore(name));
    };
    request.onerror = () => reject(request.error);
  });
};

// function to open the correct store, uses the factory function
const openStore = getStoreFactory(IDBConfig.name, version);

// function that caches a POST request made to our api so we can retry it when we're back online
const cacheApiRequest = async request => {
  // a Request can't be stored as is, so we copy its properties to a plain object
  const headers = Object.fromEntries(request.headers.entries());
  const body = await request.text();
  const serialized = {
    headers,
    body,
    url: request.url,
    method: request.method,
    mode: request.mode,
    credentials: request.credentials,
    cache: request.cache,
    redirect: request.redirect,
    referrer: request.referrer
  };
  const requestStore = await openStore(IDBConfig.stores[0], 'readwrite');
  // note: the store is keyed by url and add() fails for an existing key,
  // so only the first failed request per url is kept
  requestStore.add(serialized);
};

// here we cache the api responses so we can use them later if needed when offline
const cacheApiResponse = async response => {
  try {
    const store = await openStore(IDBConfig.stores[1], 'readwrite');
    // put() overwrites an existing entry for the same url, where add() would fail
    store.put(response);
  }
  catch(error) {
    console.log('idb error', error);
  }
};

// here we return a cached api response whenever our api is not available
const getCachedApiResponse = request => {
  return new Promise((resolve, reject) => {
    openStore(IDBConfig.stores[1])
      .then(store => {
        const cachedRequest = store.get(request.url);
        // when there's no error the 'onsuccess' handler is called, but that doesn't mean we have a response in our cache
        // we still have to check if the result is not 'undefined'
        cachedRequest.onsuccess = () => {
          const cached = cachedRequest.result;
          resolve(cached === undefined ? null : new Response(JSON.stringify(cached.json)));
        };
        cachedRequest.onerror = e => {
          console.log('cached response error', e, cachedRequest.error);
          reject(cachedRequest.error);
        };
      })
      // if the store can't be opened we reject instead of leaving the promise pending
      .catch(reject);
  });
};

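// here we make a call to the api and then cache the response for later use
const networkThenCache = async request => {
  const {method, url} = request;
  const requestClone = request.clone();
  try {
    const response = await fetch(request);
    if(method === 'GET') {
      // caching happens in the background so it doesn't delay or break the response
      response.clone().json()
        .then(json => cacheApiResponse({url, json}))
        .catch(error => console.log('response not cached', error));
    }
    return response;
  }
  // if an error occurred and it was a POST call, we cache the api request so we can retry it later
  // otherwise we serve a fallback response since there is no response in the cache and the network is not available
  catch(e) {
    if(method === 'POST') {
      await cacheApiRequest(requestClone);
      // ask the browser for a 'sync' event once we're back online (where Background Sync is supported)
      if('sync' in self.registration) {
        self.registration.sync.register('retry-api-calls')
          .catch(error => console.log('sync not registered', error));
      }
      return new Response(JSON.stringify({message: 'POST request was cached'}));
    }
    return new Response(JSON.stringify({message: 'no response'}));
  }
};

// here we try to serve a cached api response and if it doesn't exist we try the network
// only GET requests are looked up in the cache, anything else always goes to the network
const getCachedOrNetworkApiResponse = async request => {
  const cached = request.method === 'GET' ? await getCachedApiResponse(request) : null;
  return cached || networkThenCache(request);
};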

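// here we retry any cached api requests that were stored earlier when the network was not available
// this is typically called when the client comes back online and the 'sync' event is fired
const retryApiCalls = async () => {
  const store = await openStore(IDBConfig.stores[0]);
  const requests = await new Promise((resolve, reject) => {
    const getAll = store.getAll();
    getAll.onsuccess = () => resolve(getAll.result);
    getAll.onerror = () => reject(getAll.error);
  });
  // the returned promise resolves when all calls succeeded (also when there were none)
  // and rejects when one of them fails
  await Promise.all(requests.map(async ({url, ...init}) => {
    await fetch(new Request(url, init));
    // the call succeeded, so we remove it from the store to avoid sending it twice
    const writableStore = await openStore(IDBConfig.stores[0], 'readwrite');
    writableStore.delete(url);
  }));
};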

// when our service worker is installed we populate the cache with our static assets
const installHandler = e => {
  e.waitUntil(
    caches.open(cacheName)
      .then(cache => cache.addAll(staticFiles))
  );
};

// when our service worker is activated we create the database and prefetch our postings
const activateHandler = e => {
  e.waitUntil(async function() {
    // make sure the stores exist before anything is written to them
    if(self.indexedDB) {
      await createIndexedDB(IDBConfig);
    }
    const postings = [
      '/api/blog/1',
      '/api/blog/12',
      '/api/blog/7'
    ];
    await Promise.all(postings.map(url => prefetch(url)));
  }());
};

// our fetch handler which is called on every outgoing request
// it is not async, because e.respondWith has to be called synchronously
const fetchHandler = e => {
  const {request} = e;
  const {pathname} = new URL(request.url);
  // if there's a call to our api, we try to serve a cached response, otherwise we call the api
  // and cache the response for later use
  if(pathname === '/api' || pathname.startsWith('/api/')) {
    e.respondWith(getCachedOrNetworkApiResponse(request));
  }
  // if it's a request for a static asset we serve it from the cache and when it's not cached we
  // fetch it from the network
  else {
    e.respondWith(
      caches.match(request)
        .then(response => response ? response : fetch(request))
    );
  }
};

// when we were offline and come back online, a 'sync' event is fired if a sync was registered
// we can now retry any api requests that were cached earlier (if any)
const syncHandler = e => {
  e.waitUntil(retryApiCalls());
};

self.addEventListener('install', installHandler);
self.addEventListener('activate', activateHandler);
self.addEventListener('fetch', fetchHandler);
self.addEventListener('sync', syncHandler);

How and when to cache

In the above example service worker, we first try to serve API calls from the cache and if that fails, we try the network.

Of course we could also go to the network for all API calls and only serve a cached response when the network is not available. Other strategies are also possible, like network only or cache only for example.
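To make the difference concrete, here is a sketch of both strategies as plain functions. The names cacheFirst and networkFirst are hypothetical, as are the parameters: readCache plays the role of getCachedApiResponse, and fetchFromNetwork is assumed to be any function that calls fetch and rejects when the network is unavailable:

```javascript
// cache first: serve the cached value and only go to the network on a cache miss
const cacheFirst = async (request, readCache, fetchFromNetwork) =>
  await readCache(request) || fetchFromNetwork(request);

// network first: go to the network and only fall back to the cache when that fails
const networkFirst = async (request, readCache, fetchFromNetwork) => {
  try {
    return await fetchFromNetwork(request);
  }
  catch(error) {
    return readCache(request);
  }
};
```

Cache first gives the fastest response but can serve stale data, while network first always serves fresh data when the network is available.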

In the next article we will take an in-depth look at the various caching strategies we can employ.